Tiered Moderation

Timeline

2017 - 2020

Role

Lead Product Designer

Team

Community & Safety

A big part of GitHub's Community & Safety team's ethos was to build the tools and systems that let open source communities govern themselves as they see fit. We set out to create a framework on which we could build a suite of tools to empower those communities. This toolkit was also integrated seamlessly into Discussions and Mobile.

Vision

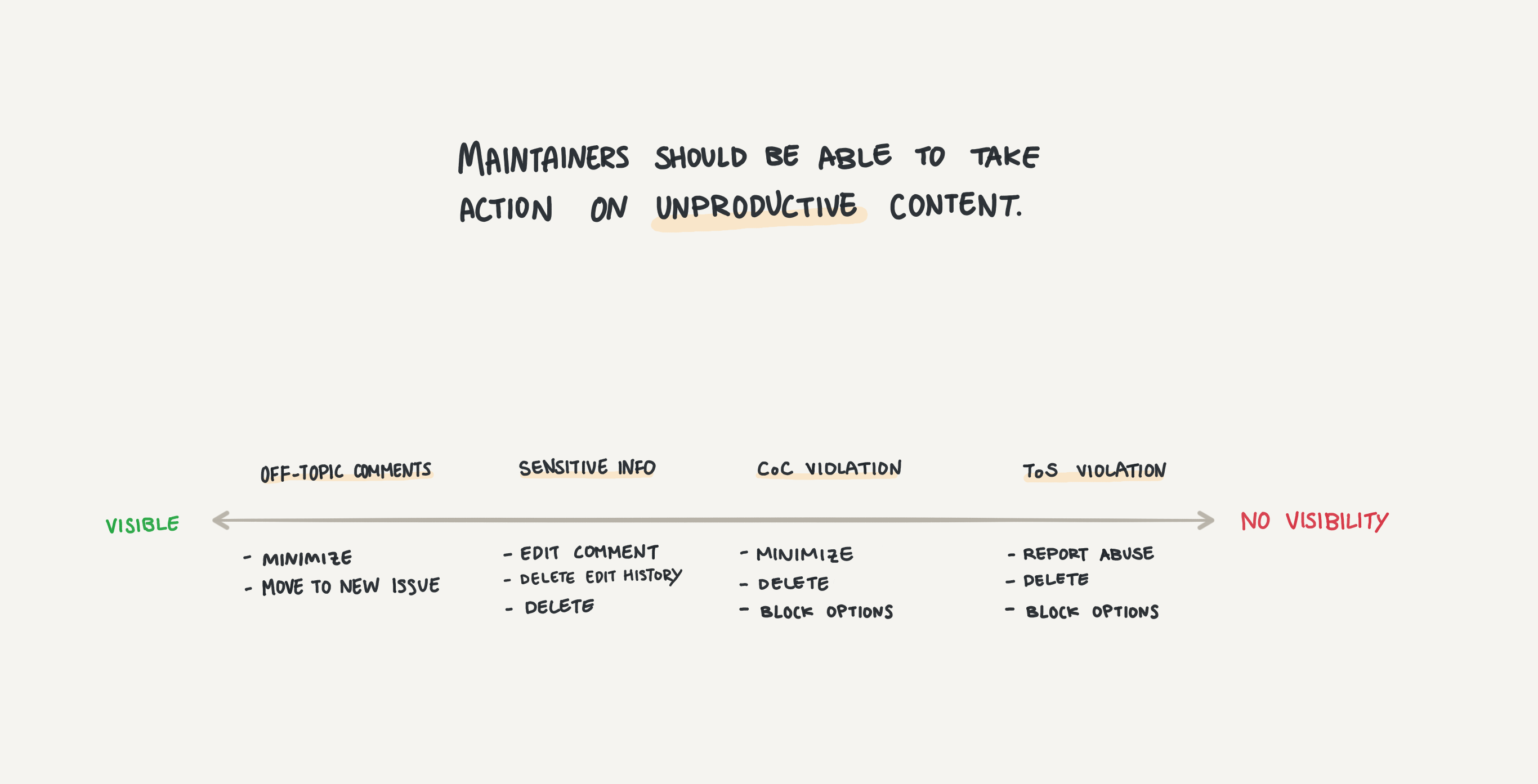

We were confident that a suite of moderation tools would improve the health of communities while reducing maintainer burnout, especially after hearing many maintainers describe their moderation pain points.

Design opportunities

Create a system of safety

I took this as an opportunity to establish guidelines for how future designers might include similar safety features in their respective areas. Because we wanted to encourage all teams to take on the responsibility of incorporating safety principles into their work, this felt like a useful framing.

Encourage good behavior

Ultimately, one of the biggest design challenges in building the toolkit was encouraging good online citizenship. This goes hand in hand with the broader vision of offering tools of varying power, so that each community can govern itself as it sees fit.

Digging deeper into this design ethos, I often posed the challenge: "How might we make it as easy as possible to follow moderation best practices?"

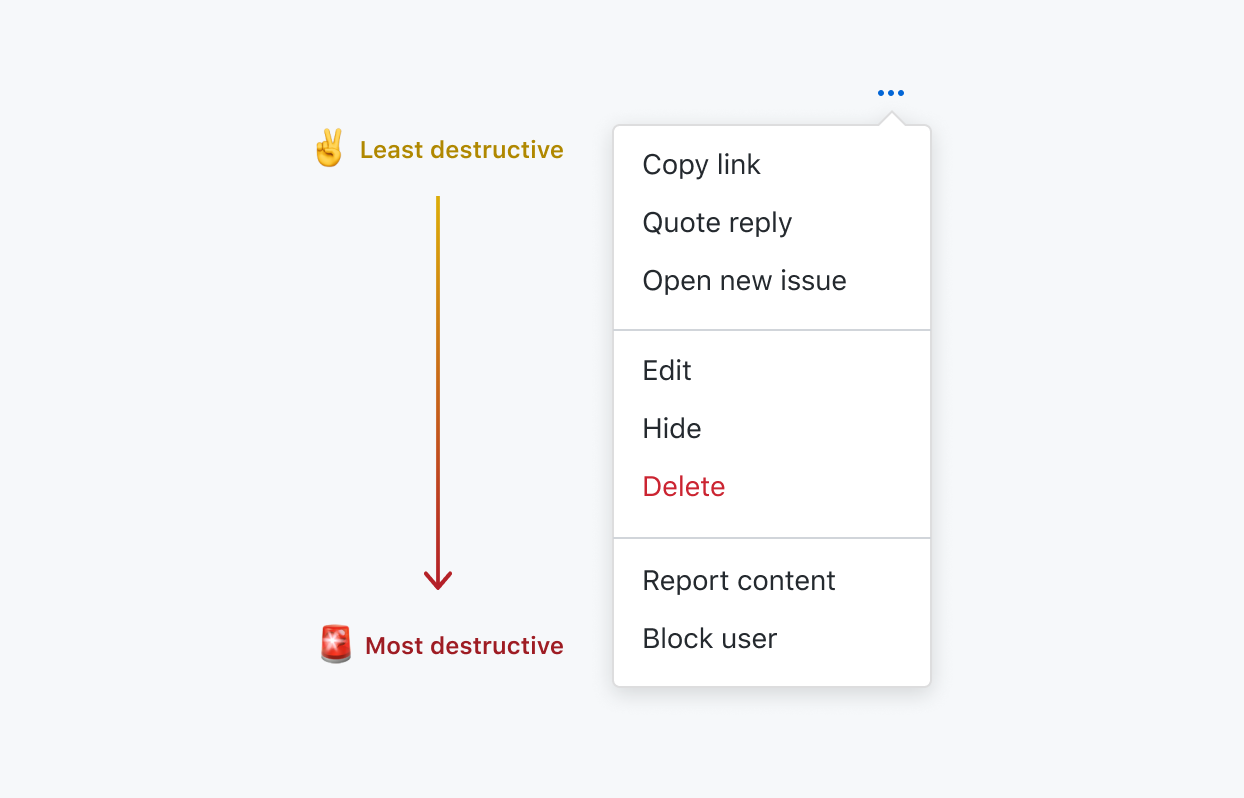

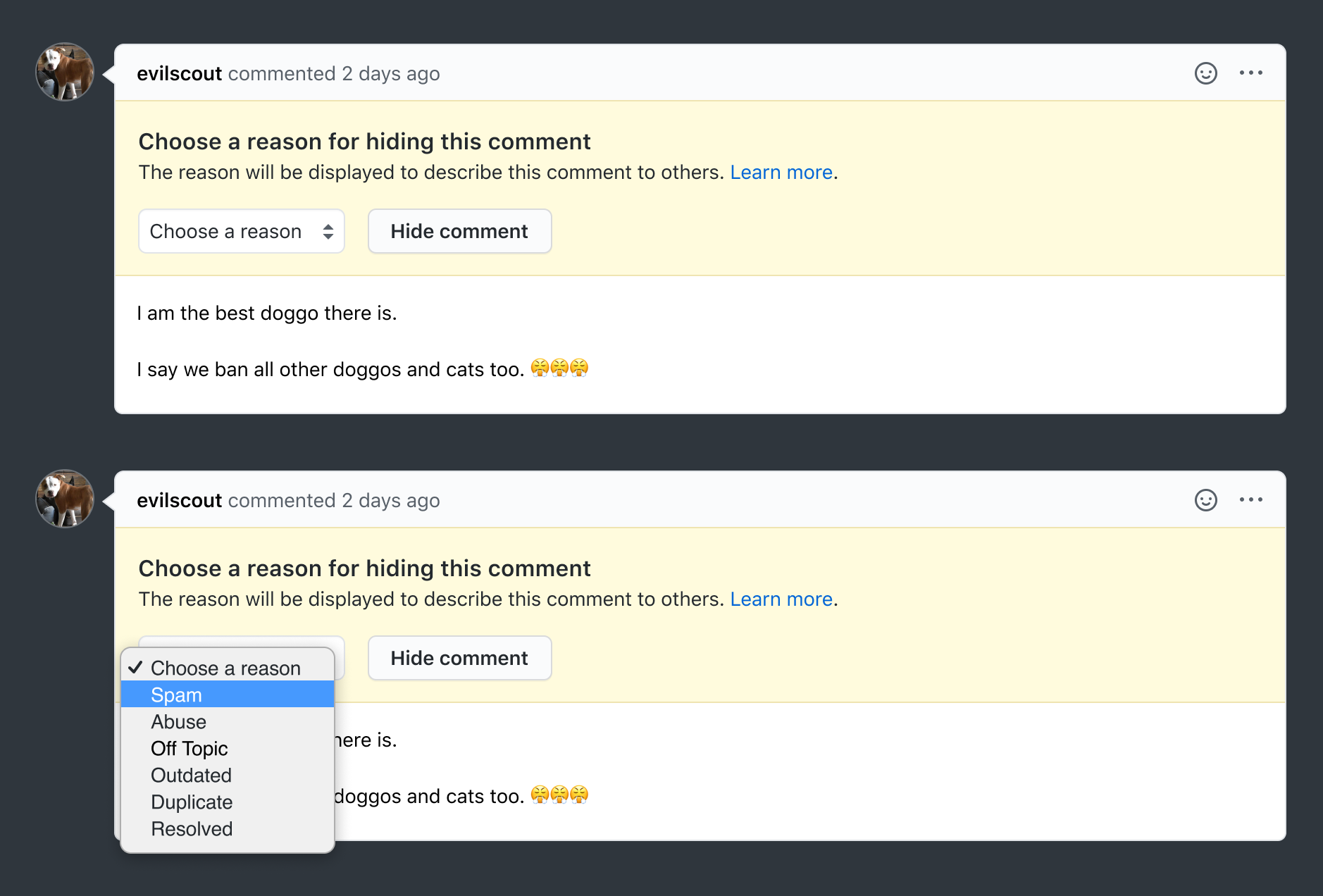

Hide comments

For our least destructive moderation action, we built the "Hide" functionality for content. To pursue this feature, I worked with the Design Systems team to introduce the "kebab" icon and implement it in the comment header.

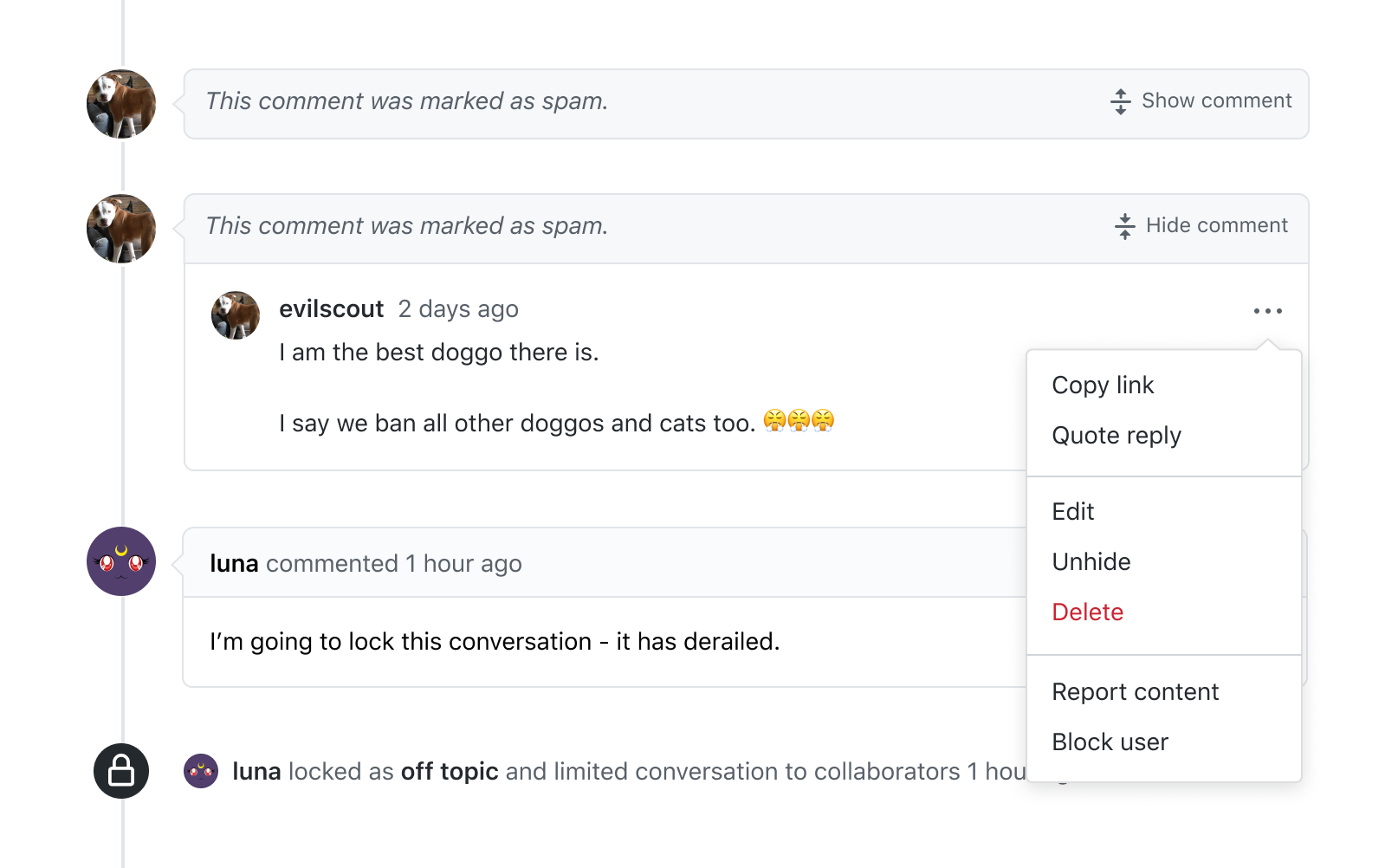

Once a reason is selected and the comment is hidden, the timeline shows a "This comment was marked as REASON" header with a show/hide toggle. The toggle minimizes the vertical space the comment takes up in the timeline while still letting others opt in to view the content if they choose.

This remains one of the most-used content moderation tools on GitHub, thanks to its flexibility and its transparency about why a piece of content was hidden. Adhering to the principle of "encourage good behavior," we provided a dropdown of the most common reasons for hiding, which we gathered from research sessions. This "reason dropdown" was extended to our Report Content form as well.

Comment edit history

We added the ability to see who edited a comment, view a diff of the edits, and delete any prior edits. This was a fun one and a bit longer to explain, so I'll be writing a separate post about it soon!

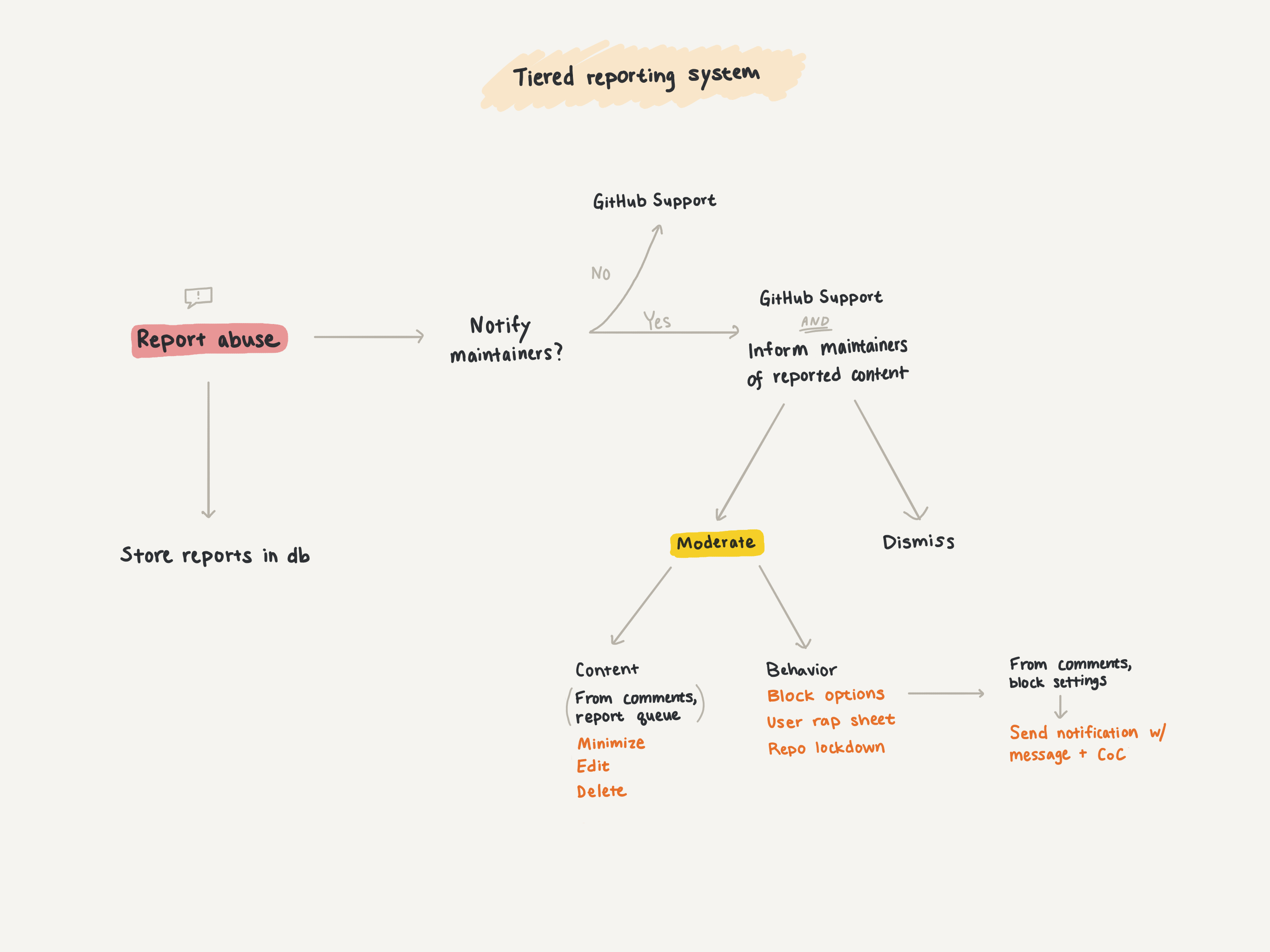

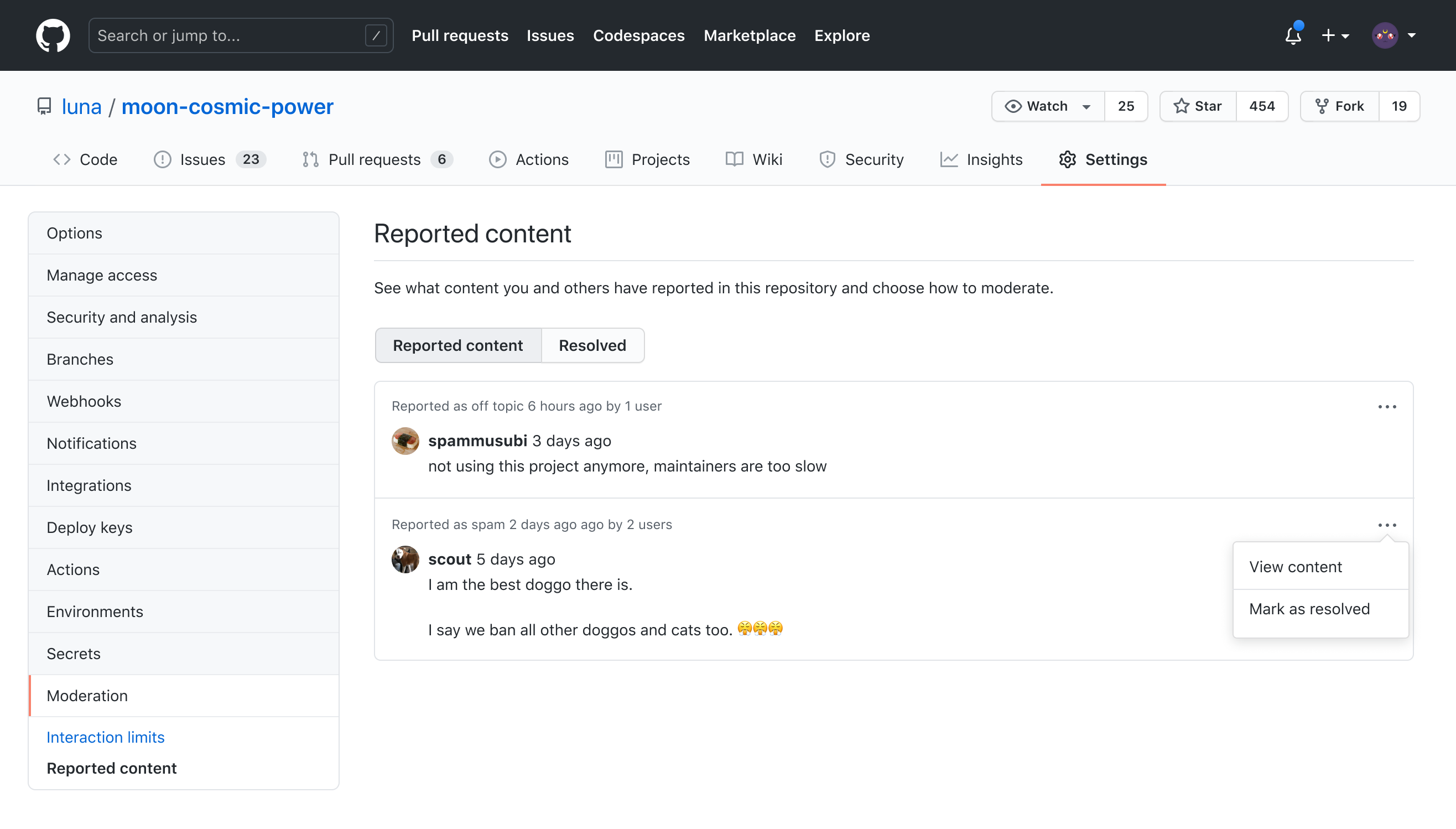

Tiered reporting flow

We improved the reporting flow by making it easier to report specific content (e.g. a comment in an issue) and by helping our Support peers reroute reports to project maintainers. I worked closely with Support to create a report routing mechanism.

Collaboration with Support

Support takes action on the majority of reports, but often receives reports that are meant for project maintainers. There was no flow on the platform to route these reports to the appropriate people.

After shadowing a Support member and getting a better understanding of what internal tooling we could build, I landed on an internal interface that Support could use to flag content to maintainers.

Maintainers would then see a queue of any flagged content in their repository settings. They could either view content and take any appropriate action, or mark the content as resolved.

Note: as of Dec 19, contributors can now choose whether to report content to maintainers or to Support!

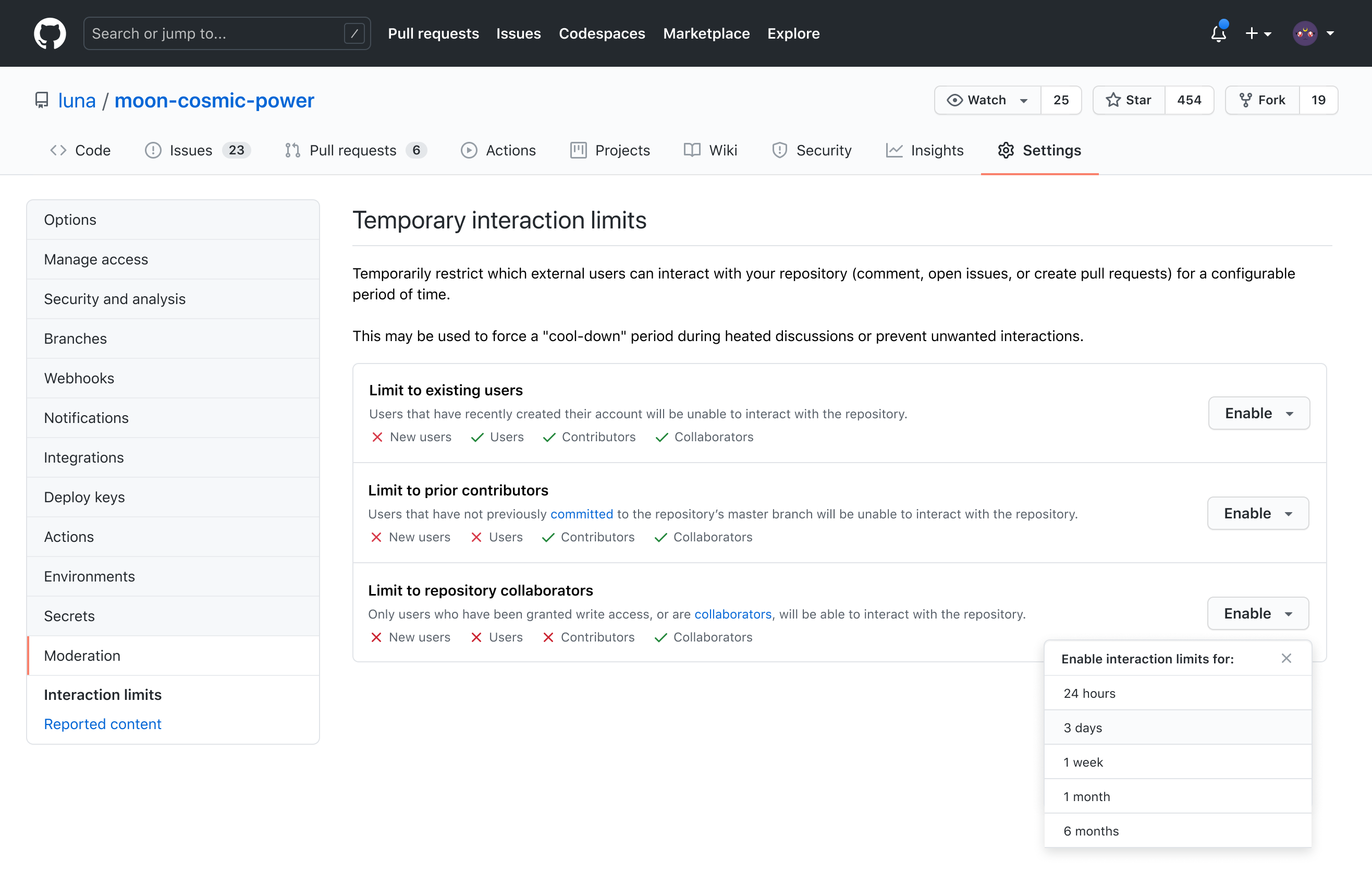

Temporary interaction limits

We recognized that users or organizations could experience a flood of unproductive or harassing content that would take a lot of energy to moderate. To force a cool-down and "stop the bleeding," we built this feature to limit who could interact with a single repository as v0.0, then extended it to all of an organization's repositories as v0.1. I ended up using this feature extensively myself when I was experiencing harassment on one of my repositories.

I'm happy to have made even more iterations on this feature, which we dubbed the "greatest subtweet feature." You can now also limit interactions across all your personal repositories for up to 6 months.

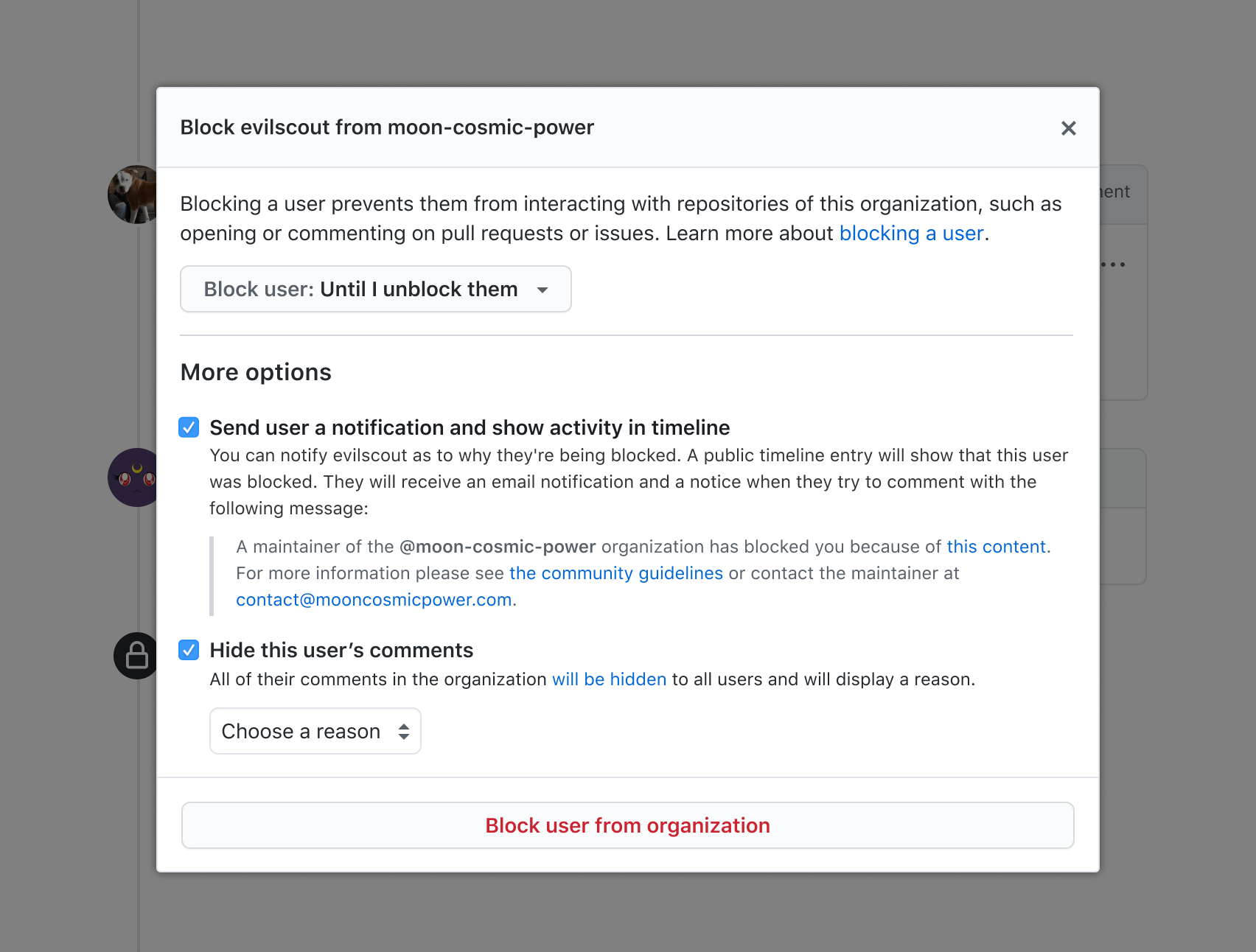

Temporary user blocking for rehabilitation

After speaking to a few maintainers, I was surprised to find that they generally preferred a rehabilitation process over permanently blocking users from the organization. This is partly because private DMs happen off GitHub, but the general philosophy is to assume good faith of the person behind the screen.

“I didn’t want to ban him just because he was having a bad day, but I did want to put him on time out.”

Participants tended to use GitHub's blocking and reporting mechanisms for spammy users, ads and self-promotion, or users who don’t know how GitHub works. For other types of user-to-user conflict or abrasive comments, temporary blocks were better suited to a cool-down.

We provided a default message you could opt in to sending, as well as a quick way to hide all of the user's comments. Tying back to the "encourage good behavior" principle, a neutral message in the "GitHub voice" helped deflect retaliation and eased maintainers' burden of rehabilitation.

GitHub has always been a “social” site, even if it may not have seemed like it because of its focus on collaborating on and gathering around code. Building the foundation of moderation tools was crucial to prepare us for increasingly social interactions, such as Discussions and Mobile. With more surfaces to interact on, I’m proud of the breadth of tools we created for many types of communities.